FDA's 2025 Cybersecurity Guidance: What’s Changed and How to Master the New Testing Requirements

The landscape of medical device regulation has fundamentally shifted. With the release of its final 2025 guidance on cybersecurity, the U.S. Food and Drug Administration (FDA) has solidified a new, more rigorous framework for ensuring the safety and security of connected medical devices. This document moves far beyond previous recommendations, reflecting legally binding statutory requirements that manufacturers must meet.

This article breaks down the critical changes introduced in the final guidance compared to the 2022 draft and provides a detailed walkthrough of the cybersecurity testing activities now needed to meet these new regulatory demands.

The New Landscape – Key Differences Between the 2025 and 2022 Guidance

The final guidance is not just an update; it's a realignment driven by new legislation that gives the FDA significant new authority.

1. The Game-Changer: FDORA and Legally Binding Requirements

The most critical update is the integration of the Food and Drug Omnibus Reform Act of 2022 (FDORA), which added Section 524B to the FD&C Act. The 2025 Final Guidance introduces the legal term "cyber device," defined in Section 524B(c) as a device that includes software, can connect to the internet, and contains technological characteristics that could be vulnerable to cybersecurity threats. For these devices, submitting cybersecurity information is now a legal requirement for premarket applications. This is a stark contrast to the 2022 Draft Guidance, which predates FDORA and was based on general recommendations.

2. The SBOM: From Recommended Best Practice to Legal Mandate

The status of the Software Bill of Materials (SBOM) has been officially elevated. Under Section 524B(b)(3), an SBOM is now required by law for all cyber devices, and the 2025 Final Guidance reflects this. The guidance specifies that the SBOM should be machine-readable and align with the NTIA's minimum elements for an SBOM.
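
To give a rough sense of what a machine-readable SBOM makes possible, the sketch below checks a simplified SBOM for the data fields listed in the NTIA minimum elements (supplier, component name, version, unique identifier, dependency relationship, SBOM author, and timestamp). The dictionary layout, key names, and component names are assumptions made for this example. A real submission would normally use a standard format such as SPDX or CycloneDX, whose schemas differ from this one.

```python
# Hypothetical, simplified SBOM layout for illustration only.
# Real SBOMs normally use SPDX or CycloneDX, which have their own schemas.

# Per-component data fields from the NTIA minimum elements.
COMPONENT_FIELDS = ("supplier", "name", "version", "unique_id", "depends_on")
# Document-level data fields from the NTIA minimum elements.
DOCUMENT_FIELDS = ("author", "timestamp")


def missing_sbom_fields(sbom: dict) -> list[str]:
    """Return human-readable gaps; an empty list means no gaps were found."""
    gaps = [f"document: missing {f}" for f in DOCUMENT_FIELDS if not sbom.get(f)]
    for index, component in enumerate(sbom.get("components", [])):
        label = component.get("name") or f"component #{index}"
        for field in COMPONENT_FIELDS:
            if field == "depends_on":
                # An empty dependency list is valid; only absence is a gap.
                if field not in component:
                    gaps.append(f"{label}: missing {field}")
            elif not component.get(field):
                gaps.append(f"{label}: missing {field}")
    return gaps


# Made-up components, not real packages.
example_sbom = {
    "author": "Example Manufacturer",
    "timestamp": "2025-01-01T00:00:00Z",
    "components": [
        {"supplier": "Example Vendor", "name": "example-tls-lib", "version": "3.0.0",
         "unique_id": "pkg:generic/example-tls-lib@3.0.0", "depends_on": []},
        {"supplier": "", "name": "libfoo", "version": "1.2.0",
         "unique_id": "pkg:generic/libfoo@1.2.0"},
    ],
}

for gap in missing_sbom_fields(example_sbom):
    print(gap)
```

A check like this could run in the build pipeline so that an incomplete SBOM is caught long before submission.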

3. A Sharper Focus on Risk and Postmarket Plans

The final guidance refines the approach to risk management and clarifies postmarket obligations. It provides a more detailed distinction between security risk management (focused on exploitability) and safety risk management (focused on probability). It also renames postmarket plans to "Cybersecurity Management Plans" to align with the legal language in FDORA and recommends specific TPLC metrics like patch timelines.

A Deep Dive into Testing – Meeting the New Regulatory Standard

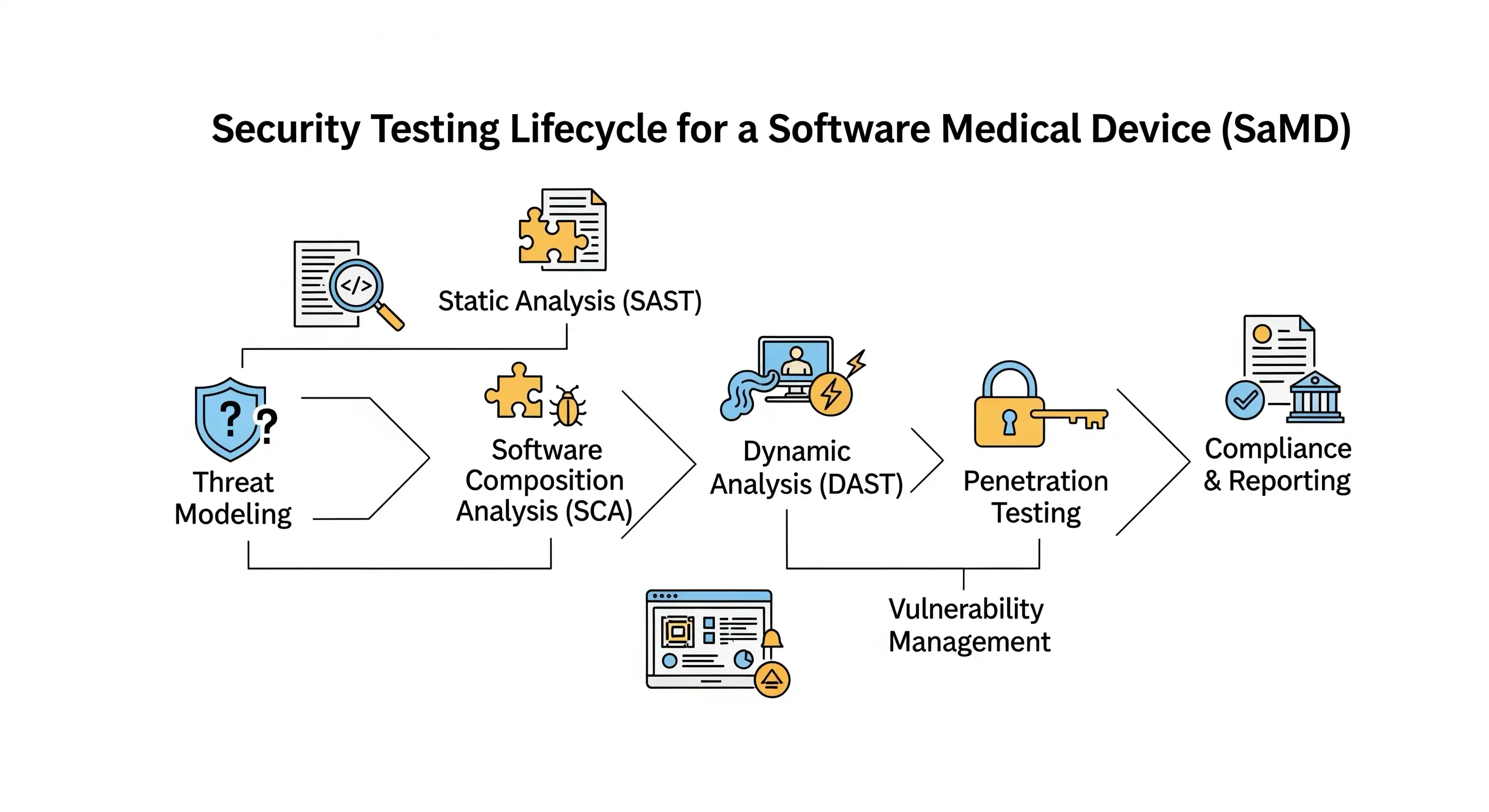

With new legal weight behind cybersecurity submissions, testing is the primary means of generating the required evidence. While the list of recommended tests is largely consistent between the two guidance documents, their regulatory implication is now much greater. To meet these expectations, your testing strategy should be comprehensive, covering four key areas to provide robust evidence of security.

Pillar 1: Security Requirements Testing

The FDA guidance states that you must provide evidence that each security design requirement was implemented successfully. This is the bedrock of your testing documentation. It means you cannot simply state that a security feature exists; you must prove, with clear and traceable evidence, that it has been built correctly and works as intended under test conditions.

Providing "Direct Evidence" via a Traceability Matrix

One of the most effective ways to provide this evidence is through a Requirements Traceability Matrix. This document creates an explicit, bidirectional link between your security requirements, your design specifications, your test cases, and your test results. To illustrate, we will use a fictional device: the Sentia-Cardia™ Mobile Monitoring System, a prescription-only Software as a Medical Device (SaMD) for remote cardiac patient monitoring.

Simplified Traceability Matrix for the Sentia-Cardia™ system:

| Requirement ID | Security Requirement | Design Specification | Test Case ID | Test Case Summary | Test Result | Link to Test Evidence |
|---|---|---|---|---|---|---|
| REQ-SEC-001 | The Sentia-Cardia™ Patient App shall encrypt all biomarker data before transmission to the cloud backend using an industry-standard, cryptographically strong algorithm. | DS-045: "Data Transmission Module" specifies use of TLS 1.3 with AES-256-GCM cipher suite. | TC-SEC-001 | Verify that traffic between the edge and cloud is encrypted using TLS 1.3 by inspecting network packets. | PASS | \\server\test_results\TCS-001_PacketCapture.pcap |
| REQ-SEC-002 | The Sentia-Cardia™ Clinical Dashboard shall enforce role-based access control (RBAC) to ensure only authenticated users with the "Clinician" role can view patient data and treatment suggestions. | DS-081: "User Authentication & Authorization Module" defines "Clinician" and "Admin" roles and their associated privileges. | TC-SEC-002-A | Attempt to log in with "Admin" credentials and access the patient data view. Access should be denied. | PASS | \\server\test_results\TCS-002-A_Log.txt |
| | | | TC-SEC-002-B | Log in with "Clinician" credentials and access the patient data view. Access should be granted. | PASS | \\server\test_results\TCS-002-B_Log.txt |
| REQ-SEC-003 | The Sentia-Cardia™ Patient App shall ensure the integrity of software updates by verifying a cryptographic signature before installation. | DS-102: "Secure Boot and Update Module" specifies use of RSA-4096 signature verification. | TC-SEC-003 | Attempt to install a software update package that has been intentionally corrupted or has an invalid signature. The installation must fail. | PASS | \\server\test_results\TCS-003_DeviceLog.xml |

Manually managing such a matrix is cumbersome. Tools like Jama Connect, Polarion, or Jira with specific plugins are designed to automate traceability and manage test execution.
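
For teams that keep the matrix in a spreadsheet or export it from one of these tools, a small script can sanity-check both directions of the trace. The sketch below is a minimal illustration using the requirement and test case IDs from the Sentia-Cardia™ example. The `CaseRecord` class and `traceability_gaps` function are hypothetical names invented here, not part of any of the tools mentioned.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseRecord:
    test_id: str
    requirement_id: str  # the requirement this test case claims to verify
    result: str          # "PASS" or "FAIL"


def traceability_gaps(requirement_ids: set[str],
                      cases: list[CaseRecord]) -> dict[str, list[str]]:
    """Check both directions of the requirement <-> test case link."""
    covered = {c.requirement_id for c in cases}
    return {
        # Forward direction: requirements with no verifying test case.
        "untested_requirements": sorted(requirement_ids - covered),
        # Backward direction: test cases that point at an unknown requirement.
        "orphan_tests": sorted(
            c.test_id for c in cases if c.requirement_id not in requirement_ids
        ),
        # Test cases that were executed but did not pass.
        "failed_tests": sorted(c.test_id for c in cases if c.result != "PASS"),
    }


requirements = {"REQ-SEC-001", "REQ-SEC-002", "REQ-SEC-003"}
cases = [
    CaseRecord("TC-SEC-001", "REQ-SEC-001", "PASS"),
    CaseRecord("TC-SEC-002-A", "REQ-SEC-002", "PASS"),
    CaseRecord("TC-SEC-002-B", "REQ-SEC-002", "PASS"),
    CaseRecord("TC-SEC-003", "REQ-SEC-003", "PASS"),
]

print(traceability_gaps(requirements, cases))
```

An empty list under every key means each requirement has at least one passing test case and no test case is left without a parent requirement.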

Defining and Performing a Boundary Analysis

The FDA guidance defines boundary analysis as "the process of uniquely assigning information resources to an information system, which defines the security boundary for that system". In simpler terms, it's drawing a clear line around what you, the manufacturer, control and are responsible for securing. For the Sentia-Cardia™ system, this means defining the boundary around the Patient App software, the Cloud Platform, and the Clinical Dashboard software, while acknowledging that things like the patient's phone OS, home Wi-Fi, and the hospital's network are outside the boundary. You must then analyze every interface where data crosses this boundary and define the specific security controls at each point.
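
One way to keep a boundary analysis honest is to record every interface as data and flag any boundary crossing that has no documented control. The sketch below assumes the three in-boundary components named above. The `Interface` class, the listed interfaces, and the control names are illustrative assumptions, not content from the guidance.

```python
from dataclasses import dataclass

# Components inside the manufacturer's security boundary (from the example above).
IN_BOUNDARY = {"Patient App", "Cloud Platform", "Clinical Dashboard"}


@dataclass(frozen=True)
class Interface:
    source: str
    destination: str
    controls: tuple[str, ...]  # security controls documented for this interface


def crosses_boundary(iface: Interface) -> bool:
    """True when exactly one end of the interface is inside the boundary."""
    return (iface.source in IN_BOUNDARY) != (iface.destination in IN_BOUNDARY)


def uncontrolled_crossings(interfaces: list[Interface]) -> list[Interface]:
    """Boundary-crossing interfaces with no documented security control."""
    return [i for i in interfaces if crosses_boundary(i) and not i.controls]


# Hypothetical interface inventory for illustration.
interfaces = [
    Interface("Patient Phone OS", "Patient App", ()),
    Interface("Patient App", "Cloud Platform", ("TLS 1.3",)),
    Interface("Hospital Network", "Clinical Dashboard", ("RBAC login",)),
]

for iface in uncontrolled_crossings(interfaces):
    print(f"No control documented: {iface.source} -> {iface.destination}")
```

Here the link between the phone OS and the Patient App is flagged, which would prompt the team either to document a control or to justify why none is needed.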

Pillar 2: Threat Mitigation Testing

This pillar proves you built the right defenses against credible threats, based on a foundational **Threat Model**. Threat modeling is a systematic process where you think like an attacker to find weaknesses in your system before it’s built, often using a framework like STRIDE (Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service, Elevation of Privilege).

Extract from a Sample Security Risk Analysis:

| Threat ID | Threat Description (from Threat Model) | Potential Hazard | Control / Mitigation Implemented | Verification Test Case ID |
|---|---|---|---|---|
| TH-004 | An attacker performs a Denial of Service attack by flooding the cloud backend with high-volume network traffic. | Doctors do not receive near real-time alerts about critical patient biomarker changes, leading to a delay in treatment. | The Sentia-Cardia™ Cloud Platform's network infrastructure shall include a load balancer and auto-scaling server resources. The API gateway shall implement rate-limiting to reject excessive requests from a single IP address. | TC-STRESS-001 |
| TH-005 | The wireless connection between the patient's smartphone (Patient App) and their BLE biomarker sensor is lost due to signal interference or the patient walking out of range. | A gap in biomarker data is created, potentially causing an incorrect treatment suggestion or a missed critical event. | The Sentia-Cardia™ Patient App shall detect a loss of BLE connection within 30 seconds. It must locally buffer up to 15 minutes of data from the sensor and automatically attempt to re-establish the connection. It must provide a clear notification to the patient that the connection is lost. | TC-INTER-001 |
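
To make the TH-004 mitigation more concrete, the sketch below shows one simple form of per-IP rate limiting (a fixed-window counter) together with the kind of check a test like TC-STRESS-001 might perform. It is an illustration under assumptions: the class name, the limit of 3 requests per second, and the injected clock are all invented for the example, and a production API gateway would normally provide this control itself.

```python
import time
from typing import Callable


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per client per `window_seconds`."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so tests can control time
        # client IP -> (window start time, requests counted in that window)
        self._windows: dict[str, tuple[float, int]] = {}

    def allow(self, client_ip: str) -> bool:
        now = self.clock()
        start, count = self._windows.get(client_ip, (now, 0))
        if now - start >= self.window_seconds:
            start, count = now, 0  # window expired, start a new one
        if count >= self.limit:
            self._windows[client_ip] = (start, count)
            return False  # over the limit: reject the request
        self._windows[client_ip] = (start, count + 1)
        return True


# Verification-style check with a fake clock (values are assumptions).
fake_now = [0.0]
limiter = FixedWindowRateLimiter(limit=3, window_seconds=1.0,
                                 clock=lambda: fake_now[0])
burst = [limiter.allow("203.0.113.7") for _ in range(5)]
print(burst)  # [True, True, True, False, False]

fake_now[0] = 1.5  # move past the window
print(limiter.allow("203.0.113.7"))  # True
```

Injecting the clock keeps the test deterministic, so the recorded result can serve as repeatable evidence rather than depending on wall-clock timing.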

Pillar 3: Proactive Vulnerability Hunting

With controls designed to mitigate known threats, the logical next step is to hunt for unknown weaknesses before attackers do, which is what the FDA expects of manufacturers. This involves a suite of detailed tests designed to uncover a wide range of potential security issues. The outputs of these activities form a significant part of the design verification and validation evidence within your Design History File (DHF).

1. Static and Dynamic Code Analysis (SAST & DAST)

Explanation: SAST is a "white-box" method where automated tools scan your application's source code, byte code, or binary code without running it. It's excellent at finding flaws early, such as SQL injection vulnerabilities, insecure cryptographic practices, or hardcoded secrets. DAST is a "black-box" method where the application is tested while it is running. A DAST tool sends various malicious inputs and observes the responses to identify vulnerabilities like Cross-Site Scripting (XSS) or insecure server configurations.

Fit-for-Use Tools: SAST tools are source code analyzers that integrate directly into a developer's IDE or your CI/CD pipeline. DAST tools include automated web vulnerability scanners or security proxies.

Timing and Ownership: SAST should be run continuously by developers as they write code and automatically as part of every build in the CI/CD pipeline. DAST is typically run later, during the integration and system testing phases. Developers run SAST tools on their own code, while the QA or Security Team typically runs DAST scans. Results are reviewed and signed off by a Security Lead or QA Manager.

DHF Evidence: The final SAST and DAST reports, along with documented assessments of each finding, remediation tickets, and re-test results showing the fix, are all included as design verification evidence in the DHF.
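
To illustrate the idea behind one SAST check mentioned above (hardcoded secrets), here is a deliberately naive pattern-based scan. It is a toy under stated assumptions: the regular expression and function name are invented for this example, and real SAST tools analyze code structure and data flow rather than matching single lines.

```python
import re

# Rough pattern for illustration: a secret-like variable name assigned a
# quoted literal of at least four characters. Real SAST rules go much further.
SECRET_PATTERN = re.compile(
    r"""(?i)(password|passwd|secret|api_key|token)\w*\s*=\s*(["'])[^"']{4,}\2"""
)


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line number, stripped line) for each suspicious assignment."""
    findings = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        if SECRET_PATTERN.search(line):
            findings.append((line_number, line.strip()))
    return findings


# Made-up source text to scan.
sample = (
    'db_password = "hunter2!"\n'
    'timeout_seconds = 30\n'
    'api_key = os.environ["API_KEY"]\n'
)

for line_number, text in find_hardcoded_secrets(sample):
    print(f"line {line_number}: {text}")
```

Only the first line is reported, because the third line reads the key from the environment instead of embedding a literal value.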

2. Known Vulnerability Scanning & Software Composition Analysis (SCA)

Explanation: Modern applications are built with numerous open-source and third-party libraries. SCA tools first identify every one of these components and their dependencies (creating the foundation of your SBOM) and then check them against public vulnerability databases (like the National Vulnerability Database) to see if any known security flaws exist.

Fit-for-Use Tools: SCA tools that integrate with your software package managers and CI/CD pipeline to provide continuous monitoring of third-party components.

Timing and Ownership: This should be run continuously during development; a dependency scan should be a mandatory part of every build process. The Development Team is responsible for running the scans and updating vulnerable components. The Security Team typically sets the policy (e.g., "no components with a critical or high-severity vulnerability are permitted"). Sign-off is from the Security Lead.

DHF Evidence: The complete SCA report, which serves as a detailed supplement to the SBOM, along with the risk assessments for any vulnerabilities that cannot be immediately patched, are key DHF artifacts.
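
The example policy quoted above ("no components with a critical or high-severity vulnerability are permitted") can be enforced as a build gate. The sketch below assumes the SCA tool's findings have already been parsed into simple records. The `Finding` class, component names, and vulnerability IDs are placeholders invented for the example, not real packages or CVEs.

```python
from dataclasses import dataclass

# Severities that block a build under the example policy.
BLOCKING_SEVERITIES = {"CRITICAL", "HIGH"}


@dataclass(frozen=True)
class Finding:
    component: str
    version: str
    vulnerability_id: str  # placeholder IDs below, not real CVE numbers
    severity: str


def policy_violations(findings: list[Finding]) -> list[Finding]:
    """Findings whose severity is not permitted by the policy."""
    return [f for f in findings if f.severity.upper() in BLOCKING_SEVERITIES]


def build_exit_code(findings: list[Finding]) -> int:
    """Return 1 (fail the build) if any violation exists, otherwise 0."""
    return 1 if policy_violations(findings) else 0


# Hypothetical scan output.
scan_findings = [
    Finding("example-json-lib", "2.1.0", "VULN-0001", "HIGH"),
    Finding("example-log-lib", "1.4.2", "VULN-0002", "LOW"),
]

for violation in policy_violations(scan_findings):
    print(f"BLOCKED: {violation.component} {violation.version} "
          f"({violation.vulnerability_id}, {violation.severity})")
print("exit code:", build_exit_code(scan_findings))
```

A vulnerability that cannot be patched immediately would still need the documented risk assessment described above; a gate like this only decides whether the build proceeds.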

3. Abuse Case and Malformed Input Testing

Explanation: This type of testing focuses on the application's business logic. Instead of just testing for a crash, testers think like a malicious user. What happens if I try to order a negative quantity of something? What if I submit a doctor's note that is 10 GB in size? It's about testing the logical boundaries of the application.

Fit-for-Use Tools: This is often a manual process, but it's greatly aided by web proxies that allow a tester to intercept and modify the data being sent from an app to its backend.

Timing and Ownership: This should be performed during the system testing and user acceptance testing (UAT) phases. It is performed by the QA team or a specialized security tester, and results are signed off by the QA Manager.

DHF Evidence: The abuse test cases, execution records, and results (including logs or screenshots of how the system correctly handled the abuse case) are included as design validation evidence.
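
Abuse cases like the ones described above translate naturally into table-driven tests. The sketch below shows two server-side validators and a small list of abuse inputs that must be rejected. The limits (`MAX_QUANTITY`, `MAX_UPLOAD_BYTES`) and function names are assumptions chosen for illustration.

```python
MAX_QUANTITY = 100                   # assumed business limit
MAX_UPLOAD_BYTES = 10 * 1024 * 1024  # assumed 10 MiB upload cap


def validate_quantity(quantity: object) -> int:
    """Accept only whole numbers within the allowed business range."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(quantity, bool) or not isinstance(quantity, int):
        raise ValueError("quantity must be an integer")
    if not 1 <= quantity <= MAX_QUANTITY:
        raise ValueError("quantity out of range")
    return quantity


def validate_upload_size(size_bytes: int) -> int:
    """Reject empty uploads and uploads above the size cap."""
    if not 0 < size_bytes <= MAX_UPLOAD_BYTES:
        raise ValueError("upload size out of range")
    return size_bytes


def is_rejected(validator, value) -> bool:
    """True if the validator refuses the value with a ValueError."""
    try:
        validator(value)
    except ValueError:
        return True
    return False


# Each abuse case: (description, validator under test, malicious input).
ABUSE_CASES = [
    ("negative quantity", validate_quantity, -5),
    ("zero quantity", validate_quantity, 0),
    ("quantity sent as a string", validate_quantity, "10"),
    ("10 GB upload", validate_upload_size, 10 * 1024**3),
]

for name, validator, value in ABUSE_CASES:
    outcome = "rejected" if is_rejected(validator, value) else "ACCEPTED"
    print(f"{name}: {outcome}")
```

Keeping the cases in a list makes it easy to add a new abuse scenario and to export the executed cases and outcomes as DHF evidence.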

4. Fuzz Testing

Explanation: Fuzzing is a powerful, automated method for finding bugs that humans might miss. It involves throwing massive amounts of semi-random, invalid, and unexpected data at an input field, file parser, or API endpoint to see if the system crashes, hangs, or reveals a memory leak.

Fit-for-Use Tools: Fuzzing frameworks for different programming languages and protocol-specific fuzzers.

Timing and Ownership: Fuzz testing is conducted during integration and system testing, and tests are often run automatically for long periods (hours or even days) to be effective. This is typically owned by a dedicated Security Team or specialized QA engineers due to the complexity of setup and result analysis. Sign-off is from the Security Lead.

DHF Evidence: Fuzz testing reports, details of the inputs that caused any crashes, crash dumps, and the associated bug reports and fixes are all included in the DHF.
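
The core loop of a fuzzer is small enough to sketch. The example below throws random byte strings at a toy parser that contains a deliberate bug, and records every input that triggers an unexpected exception. Both the parser and the fuzzer are invented for illustration. Real fuzzing frameworks add coverage feedback, input mutation, and crash minimization on top of this basic idea.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Toy parser: the first byte is the payload length, the rest is payload."""
    if not data:
        raise ValueError("empty input")  # expected, well-handled rejection
    length = data[0]
    payload = data[1:1 + length]
    # Deliberate bug for the fuzzer to find: the last payload byte is read
    # without checking that the payload is as long as the header claims.
    _last_byte = payload[length - 1] if length else 0
    return payload


def fuzz(target, iterations: int, seed: int, max_len: int = 8):
    """Feed random inputs to `target`; return (input, exception name) pairs."""
    rng = random.Random(seed)  # seeded so any crash is reproducible
    crashes = []
    for _ in range(iterations):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            pass  # the parser rejected bad input cleanly
        except Exception as exc:  # anything else counts as a crash
            crashes.append((data, type(exc).__name__))
    return crashes


crashes = fuzz(parse_record, iterations=200, seed=1234)
print(f"{len(crashes)} crashing inputs found")
if crashes:
    data, error = crashes[0]
    print(f"first crash: {data!r} -> {error}")
```

Saving the seed and the crashing inputs is what turns a fuzz run into evidence: anyone can replay the exact input, confirm the defect, and later confirm the fix.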

5. Attack Surface Analysis

Explanation: This is more of an analytical activity than a test. The goal is to create a complete map of your system's "surface" that is exposed to the outside world. This includes all user interfaces, APIs, network ports and services, and files that can be input or output.

Fit-for-Use Tools: While primarily a manual process of reviewing architecture diagrams, it is supported by tools like network port scanners and API documentation tools.

Timing and Ownership: This should be done early in the design phase and then revisited any time there is a significant change to the device's architecture. It is typically owned by a Security Architect or a Senior Security Engineer. It is reviewed and signed off by the Head of Product Security or senior technical leadership.

DHF Evidence: The Attack Surface Analysis document itself, including diagrams and a list of all identified interfaces, is a key design document within the DHF.

6. Vulnerability Chaining

Explanation: This is a creative, attacker-focused exercise. A single flaw may seem minor on its own, but what if an attacker can use a low-risk information disclosure bug (e.g., leaking a software version number) to enable a second, more serious attack that only works on that specific version? That is a vulnerability chain.

Fit-for-Use Tools: This is an advanced manual activity, almost exclusively performed by experienced penetration testers.

Timing and Ownership: This is conducted during the final penetration testing phase before release. It is performed by an external penetration testing firm or a highly skilled internal security team (a "red team"). It is signed off by the Head of Security.

DHF Evidence: Any identified vulnerability chains would be documented as critical findings in the final penetration test report, which is a key piece of design validation evidence in the DHF.

Pillar 4: The Real-World Challenge: Penetration Testing

After extensive automated and manual hunting for vulnerabilities, the final challenge is to test your defenses against a simulated real-world attacker. Pillar 4, the penetration test, is arguably the most critical validation activity in the entire security process. The FDA guidance calls for penetration testing to "focus on discovering and exploiting security vulnerabilities in the product". The final report is a critical piece of evidence for your premarket submission and should include details on the testers' independence and expertise, the scope, the duration, the methods, and all findings.

Planning and Preparation: Setting the Stage for Success

A successful penetration test begins long before the first packet is sent. Proper planning ensures the test is efficient, effective, and provides maximum value. This includes defining a clear Scope and establishing Rules of Engagement (RoE) that govern the test, including permitted techniques, testing windows, and emergency contacts.

Internal Audits vs. Independent Penetration Testing

It’s important to understand the difference between internal reviews and the independent test the FDA expects. An in-house "white box" audit is excellent for finding deep flaws but is inherently biased. The FDA asks for evidence of the testers' independence, so the penetration test is typically performed by an external organization, often in a "black box" style with little to no internal knowledge, to simulate a real-world attacker and ensure the assessment is objective.

Understanding Limitations and Why You Never Stop at First Breach

Every penetration test is constrained by time and scope and is not a guarantee that a device is "unhackable." It is poor practice to stop testing once the first breach is achieved. The goal is to identify as many vulnerabilities as possible across the entire attack surface to provide a comprehensive assessment.

Enabling the Testers and Managing the Environment

To get the most out of your investment, the test must be conducted late in the development cycle on a feature-complete "release candidate" in a dedicated, high-fidelity environment that mirrors production. Even when the engagement is largely black-box, you should provide the testers with access to the application, valid user credentials for each role, and necessary API documentation so that limited testing time is not spent on basic setup.

Handling Results and Contracting for Re-Testing

The work begins when the report arrives. Findings must be formally triaged, risk-assessed, prioritized, and entered into your bug tracking system. It is a best practice to contract for re-testing to verify that fixes are effective and have not introduced new vulnerabilities.

The Importance of a Long-Term Testing Strategy

Penetration testing is not a one-time activity. Regulators expect a mature, ongoing security program. Your security plan should budget for regular penetration tests throughout the product lifecycle and consider rotating your testing firm every 2-3 years to bring in a fresh perspective.

At a Glance: Key Testing Differences

| Aspect | 2022 Draft Guidance | 2025 Final Guidance | Notes |
|---|---|---|---|
| Regulatory Context | Testing was a recommendation to demonstrate a "reasonable assurance of safety and effectiveness." | For "cyber devices," testing is the method to generate evidence to meet legally mandated requirements under FDORA. | The weight of evidence is now much higher. |
| Handling of Findings | Recommended assessing all findings and providing rationales for deferred fixes. | The same recommendation exists, but the rationale for deferring a fix faces higher scrutiny, as it could mean failing to meet a legal standard. | Justification for not fixing a bug must be robust. |
| Post-Market Testing | Recommended testing at "regular intervals (e.g., annually)." | Clarifies that post-market testing should be at regular intervals "commensurate with the risk," adding a risk-based nuance. | Higher risk devices require more frequent testing. |

Conclusion

The FDA’s 2025 final guidance marks a pivotal moment, shifting medical device cybersecurity from a best practice to a legal mandate. For manufacturers, this means that a comprehensive, evidence-based testing program is no longer optional. By understanding the key changes and mastering the four pillars of testing, companies can build a strong foundation for compliance and, most importantly, deliver safe, secure, and effective devices that patients and providers can trust. The complexity of these new requirements can be daunting. For organizations feeling overwhelmed, seeking guidance from specialized consultants with expertise in medical device cybersecurity can be a critical investment to ensure a smoother path to compliance and market access.