Beyond the Hype: Why 80% of AI Projects Fail at the Data Layer

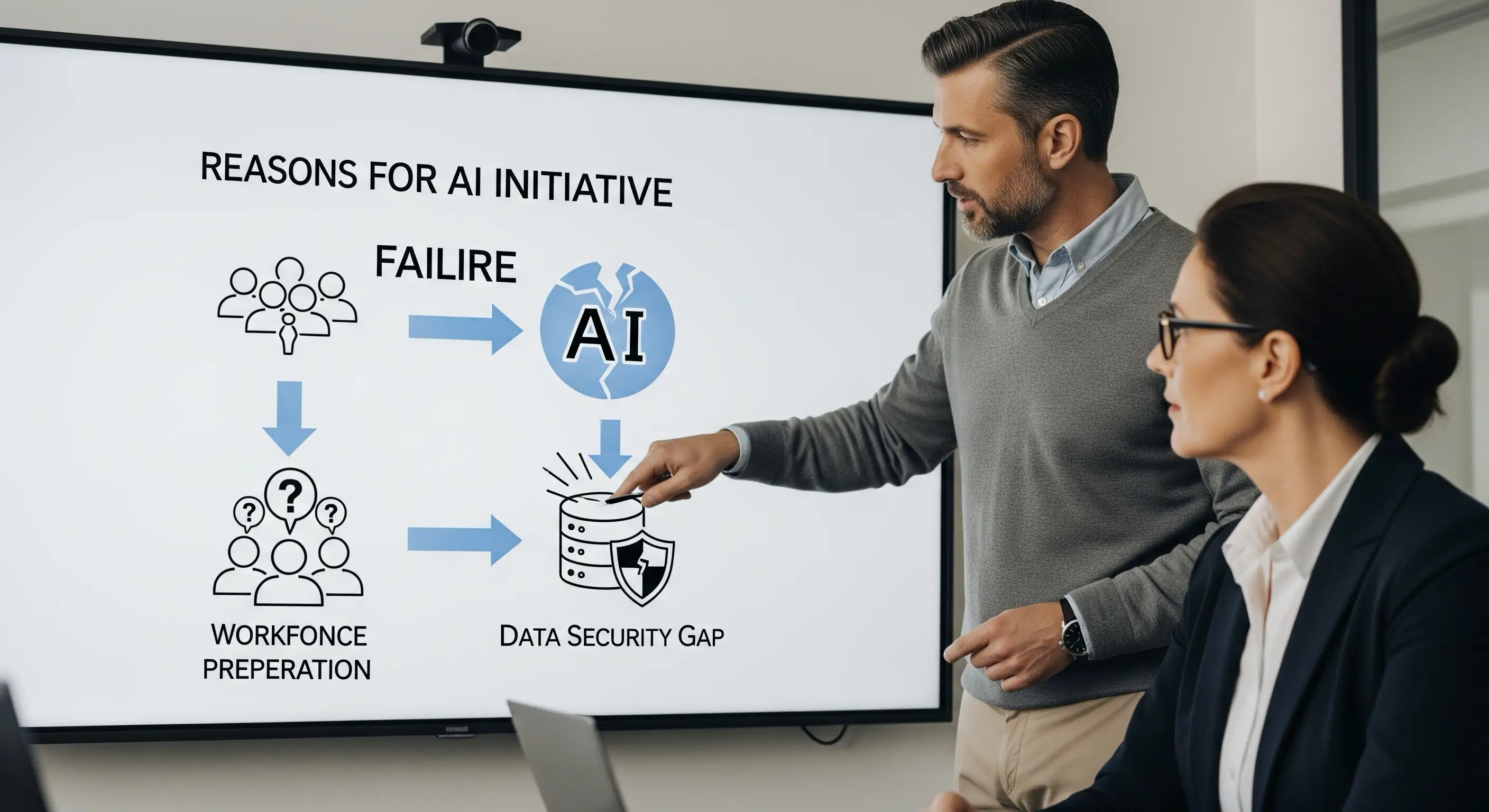

Artificial intelligence initiatives are not failing because of bad ideas. They are failing at enterprise scale because of two fundamental gaps that are often overlooked in the rush to innovate: workforce preparation and, more critically, data security for AI.

According to sobering industry reports from Gartner and MIT, a staggering 70% to 82% of AI projects are paused or cancelled entirely at the proof-of-concept (POC) or minimum viable product (MVP) stage. The primary reason for this innovation graveyard is not a lack of ambition or flawed algorithms; it is a lack of readiness at the most foundational level: the data layer. A brilliant model built on a weak data foundation is like a skyscraper built on sand.

To succeed where so many others have stalled, we must simplify our focus and build from the ground up. This article provides a strategic deep dive into the seven critical phases of securing data for AI, highlighting the concrete business risks you ignore at your peril.

The 7 Phases of a Secure AI Data Layer


Phase 1: Data Sourcing Security

This initial phase involves a rigorous validation of the origin, ownership, and licensing rights of all data ingested into your systems. It’s about asking critical questions: Where did this data come from? Do we have the legal right to use it for training commercial models? Is it subject to GDPR, or does it contain toxic, biased, or copyrighted information? You simply cannot build scalable, defensible AI with data you don’t own or can’t trace.
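
The sourcing questions above can be turned into an automated ingestion check. The following is a minimal Python sketch, assuming each dataset arrives with a provenance record; the `DatasetRecord` fields, the `provenance_issues` function, and the `APPROVED_LICENSES` allow-list are all hypothetical and would need to reflect your own legal review.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical allow-list; the real list depends on your legal team's review.
APPROVED_LICENSES = {"CC0-1.0", "CC-BY-4.0", "MIT", "Apache-2.0", "proprietary-owned"}


@dataclass
class DatasetRecord:
    """Provenance metadata captured for each dataset before ingestion (hypothetical schema)."""
    name: str
    source: Optional[str]                # where the data came from (URL, vendor, system)
    owner: Optional[str]                 # accountable owner of the data
    license: Optional[str]               # license identifier or internal label
    contains_personal_data: bool         # e.g. data in scope for GDPR
    lawful_basis: Optional[str] = None   # documented basis for processing personal data


def provenance_issues(record: DatasetRecord) -> List[str]:
    """Return human-readable reasons the dataset should not be ingested yet."""
    issues = []
    if not record.source:
        issues.append("unknown source: data cannot be traced")
    if not record.owner:
        issues.append("no accountable owner")
    if record.license not in APPROVED_LICENSES:
        issues.append(f"license {record.license!r} not approved for model training")
    if record.contains_personal_data and not record.lawful_basis:
        issues.append("personal data without a documented lawful basis")
    return issues


if __name__ == "__main__":
    scraped = DatasetRecord(
        name="forum-dump",
        source="https://forum.example.com",
        owner=None,
        license=None,
        contains_personal_data=True,
    )
    for issue in provenance_issues(scraped):
        print("-", issue)
```

A pipeline would run this before ingestion and block any dataset that returns a non-empty list, routing it to legal or data governance for review.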

Why It Matters: Ignoring this step is a ticking time bomb. Using unlicensed or improperly sourced data can lead to massive fines, reputational damage, and court orders to destroy your models, invalidating millions in R&D investment.

Phase 2: Data Infrastructure Security

Here, the focus is on ensuring your core data infrastructure—the data warehouses, data lakes, and vector databases that support your AI models—is hardened against threats. This means implementing robust access controls (who can see what data), encryption at rest, and continuous monitoring for anomalous activity. A misconfigured cloud bucket or an over-privileged service account is an open invitation for a breach.
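
A least-privilege review can be partly automated. This sketch assumes a simplified, hypothetical grant format (`Grant` with a principal, a resource, and a tuple of actions) rather than any specific cloud provider's policy language, and flags wildcard grants of the kind that turn one compromised service account into access to everything.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Grant:
    """One permission grant in a simplified, hypothetical policy format."""
    principal: str             # user or service account receiving access
    resource: str              # e.g. "lake/raw-events", "vectordb/embeddings", or "*"
    actions: Tuple[str, ...]   # e.g. ("read",) or ("*",)


def over_privileged(grants: List[Grant]) -> List[str]:
    """Return a finding for every wildcard resource or wildcard action."""
    findings = []
    for grant in grants:
        if grant.resource == "*":
            findings.append(f"{grant.principal}: access to ALL resources")
        if "*" in grant.actions:
            findings.append(f"{grant.principal}: ALL actions on {grant.resource}")
    return findings


if __name__ == "__main__":
    grants = [
        Grant("svc-training", "*", ("*",)),
        Grant("analyst", "warehouse/sales", ("read",)),
    ]
    for finding in over_privileged(grants):
        print(finding)
```

Real policy languages add conditions, explicit denies, and resource patterns, so a production audit would lean on the provider's own analysis tooling; the sketch only illustrates the principle of naming specific resources and actions.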

Why It Matters: Unsecured data environments are prime targets for both external hackers and malicious insiders. A breach at this level not only exposes sensitive customer or corporate data but can also lead to intellectual property theft or even model poisoning, where an attacker subtly corrupts your training data to make the AI behave in unpredictable and harmful ways.

Phase 3: Data In-Transit Security

This phase is about protecting data as it moves between systems. Whether it flows from an on-premises server to a cloud GPU for training, or from a third-party vendor’s API to your data lake, every transit point is a potential vulnerability. This requires encrypting every hop with strong protocols such as TLS 1.3, verifying the identity of the systems at each end, and using secure network configurations.
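
For services written in Python, the standard-library `ssl` module can enforce this policy on the client side. The sketch below refuses protocol versions older than TLS 1.3 and keeps certificate and hostname verification switched on; the function name and the optional client-certificate parameters (for mutual TLS) are illustrative assumptions.

```python
import ssl
from typing import Optional


def make_tls13_client_context(
    client_cert: Optional[str] = None,
    client_key: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a client-side TLS context that rejects anything older than TLS 1.3."""
    # create_default_context() enables certificate and hostname verification.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse to negotiate TLS 1.2 or below.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    # Optional mutual TLS: present a client certificate so the server can verify us too.
    if client_cert:
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx
```

To use it, wrap a connected socket with `ctx.wrap_socket(sock, server_hostname=host)`; the handshake fails if the peer cannot negotiate TLS 1.3 or presents a certificate that does not verify. Both endpoints must support TLS 1.3, so older vendor endpoints may need a documented, time-limited exception.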

Why It Matters: Intercepted training data is a catastrophic failure. Think of it as deciding whether to ship your company’s trade secrets across town in an armored truck with armed guards or on the back of a bicycle with a sticky note. The method of transport determines the risk, and unsecured data in transit is the easiest target for man-in-the-middle attacks.

Phase 4: API Security for Foundational Models

Safeguarding the Application Programming Interfaces (APIs) you use to connect with Large Language Models (LLMs) and third-party GenAI platforms (like OpenAI, Anthropic, Google Gemini, etc.) is non-negotiable. This involves strict API key management, rate limiting to prevent abuse, and input/output validation to ensure sensitive data isn't accidentally sent or malicious commands aren't received.
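
Input validation for outbound prompts can start with redacting obvious identifiers before any text leaves your network, while API keys belong in the environment or a secrets manager rather than in source code. This is a minimal sketch: the two regular expressions (email addresses and US Social Security numbers) are illustrative and far from complete PII coverage, and the `LLM_API_KEY` variable name is a hypothetical placeholder.

```python
import os
import re

# Illustrative patterns only; production systems typically rely on a dedicated
# PII-detection service with much broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace every match of each PII pattern with a [LABEL] placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def load_api_key(env_var: str = "LLM_API_KEY") -> str:
    """Read the provider key from the environment instead of hard-coding it."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"{env_var} is not set; refusing to call the model API")
    return key
```

In practice you would call `redact()` on every prompt just before handing it to your provider's SDK, and pair it with rate limiting and request logging at an API gateway.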

Why It Matters: An unmonitored or unsecured API call can easily leak sensitive internal data, such as customer PII or strategic plans, to a third party. Depending on the provider's data-retention and training terms, that data may be logged or even used to train future models, at which point you can no longer control or recall it. This isn’t just technical debt; it's a direct line to massive reputational and regulatory nightmares.

Phase 5: Foundational Model Protection

Once you’ve trained your own proprietary model or fine-tuned a public one, it becomes a core business asset that must be defended. This means protecting it from external inference attacks, outright theft of the model weights, or malicious querying designed to expose its underlying data or weaknesses. Techniques like prompt injection (tricking the model into ignoring its safety instructions) and model inversion (reconstructing training data from the model's outputs) are real and growing threats.
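
Malicious querying aimed at extracting a model or its training data can show up as unusually high query volume from a single client. The sketch below counts each client's queries in a sliding time window and flags those that exceed a threshold; the `QueryMonitor` class, its threshold, and its window size are hypothetical, and real detection would combine volume with other signals such as query diversity.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class QueryMonitor:
    """Flag clients whose query rate suggests scraping or model extraction.

    Assumes timestamps (in seconds) arrive in non-decreasing order per client.
    """

    def __init__(self, max_queries: int, window_seconds: float) -> None:
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one query; return True if the client now exceeds the limit."""
        window = self._history[client_id]
        window.append(timestamp)
        # Drop queries that have fallen out of the sliding window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window) > self.max_queries
```

A flagged client might be throttled, challenged, or escalated for security review rather than blocked outright, since legitimate batch workloads can also produce bursts.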

Why It Matters: Your enterprise-trained model is high-value IP. You lock your office doors at night and have security guards; you must apply the same security principle to your models. A stolen model is a direct financial loss and a gift to your competitors.

Phase 6: Incident Response for AI Data Breaches

Things will go wrong. You need predefined, tested protocols for handling AI-specific security incidents. This includes a plan for data breaches, but also for dealing with severe model hallucinations that cause customer harm, or the discovery of deep-seated bias that creates a PR crisis. The plan must clarify who gets notified (Legal, PR, C-Suite), who investigates (Data Science, Cybersecurity), and how damage is technically and reputationally mitigated.
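
A playbook is easier to rehearse and audit when it is written down as data rather than held in people's heads. This sketch encodes the notification and investigation roles named above for each incident type; the incident categories come from the text, but the `PLAYBOOK` structure, the specific team assignments, and the first containment steps are hypothetical examples.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Tuple


class IncidentType(Enum):
    DATA_BREACH = "data_breach"
    HARMFUL_HALLUCINATION = "harmful_hallucination"
    BIAS_DISCOVERY = "bias_discovery"


@dataclass(frozen=True)
class PlaybookEntry:
    notify: Tuple[str, ...]       # who is informed
    investigate: Tuple[str, ...]  # who owns the technical investigation
    first_step: str               # immediate containment action


# Hypothetical assignments; each organization must define and rehearse its own.
PLAYBOOK: Dict[IncidentType, PlaybookEntry] = {
    IncidentType.DATA_BREACH: PlaybookEntry(
        notify=("Legal", "PR", "C-Suite"),
        investigate=("Cybersecurity", "Data Science"),
        first_step="revoke exposed credentials and isolate affected data stores",
    ),
    IncidentType.HARMFUL_HALLUCINATION: PlaybookEntry(
        notify=("Legal", "PR"),
        investigate=("Data Science",),
        first_step="roll back or disable the affected model endpoint",
    ),
    IncidentType.BIAS_DISCOVERY: PlaybookEntry(
        notify=("Legal", "PR", "C-Suite"),
        investigate=("Data Science",),
        first_step="pause automated decisions made by the model",
    ),
}


def route(incident: IncidentType) -> PlaybookEntry:
    """Look up the pre-agreed response; a missing entry is itself a gap to fix."""
    if incident not in PLAYBOOK:
        raise KeyError(f"No playbook defined for {incident.value}")
    return PLAYBOOK[incident]
```

Keeping the playbook in version control also means a tabletop exercise can check that every incident type has an owner before a real incident tests it.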

Why It Matters: When an AI-related incident occurs, and it will, a fumbled response can be more damaging than the initial event. Having a clear playbook ensures a swift, coordinated, and effective response that protects customers, contains legal liability, and preserves trust in your brand.

Phase 7: CI/CD for Models (with Security Hooks)

This final phase involves treating your models like software by implementing continuous integration and continuous delivery (CI/CD) pipelines, a core practice of what is often called MLOps. Crucially, these pipelines must be embedded with automated security and governance hooks. This means every time a model is updated, it automatically goes through security scans, bias and fairness checks, and data lineage validation before it can be deployed.
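
A deployment gate of this kind can be expressed as a function that returns every reason a candidate model should not ship. The sketch below assumes a hypothetical `CandidateModel` record produced by earlier pipeline stages, and uses demographic parity difference (the largest gap in positive-prediction rates between groups) as one example fairness metric; the 0.1 threshold is an illustrative assumption, not a standard.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class CandidateModel:
    """Evidence a hypothetical CI pipeline collects before deployment."""
    name: str
    critical_vulnerabilities: int                    # from a dependency/container scan
    lineage_recorded: bool                           # training data sources are documented
    predictions_by_group: Dict[str, Sequence[int]]   # binary predictions on a held-out set, per group


def demographic_parity_gap(preds_by_group: Dict[str, Sequence[int]]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    rates = [sum(p) / len(p) for p in preds_by_group.values() if len(p) > 0]
    if len(rates) < 2:
        return 0.0
    return max(rates) - min(rates)


def deployment_gate(model: CandidateModel, max_parity_gap: float = 0.1) -> List[str]:
    """Return all reasons to block deployment; an empty list means the gate passes."""
    failures = []
    if model.critical_vulnerabilities > 0:
        failures.append(f"{model.critical_vulnerabilities} critical vulnerabilities found")
    if not model.lineage_recorded:
        failures.append("training data lineage is not recorded")
    gap = demographic_parity_gap(model.predictions_by_group)
    if gap > max_parity_gap:
        failures.append(f"demographic parity gap {gap:.2f} exceeds {max_parity_gap}")
    return failures
```

A CI job would call `deployment_gate()` and fail the build, for example by exiting with a non-zero status, whenever the returned list is non-empty. Demographic parity is only one of several fairness definitions, and the right one depends on the use case.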

Why It Matters: When models ship at the pace of software, new risks also reach production faster. Without automated governance, speed ends up trumping safety. By baking security into every deployment sprint, you build a resilient, trustworthy AI factory rather than a series of one-off projects.

Secure the Data, Secure the Future of Your AI

The path from a promising AI proof-of-concept to a scalable, enterprise-grade solution that delivers lasting business value is paved with secure, well-governed data. The hype around AI is real, but the rewards will only go to those who treat the underlying data with the discipline and rigor it requires.